Why doesnt GA4/Looker Studio match GA4 BigQuery?

So you’re comparing your GA4 BigQuery numbers with the GA4 interface or the Looker Studio Connector.

And guess what? They don't match.

Well there a quite a lot of places to check. Let's dive in.

GA4 BigQuery numbers come from a different source than Looker Studio/ the GA4 Interface

Let’s start at the beginning. Here’s how Google processes our GA4 data.

As you can see the raw GA4 event data getting sent to BigQuery, is technically the same data you see in the UI.

But you get access to it a lot earlier and without any of the guardrails that you get in the UI.

All those arrows that move between steps?

That’s where most of the decisions are made which cause the numbers to fall out of sync.

Are you a Pipeline customer?

Time to stop reading this blog post.

- Pipeline already does all of the practical actions that you can do to make these line up.

- Come and talk to our support we can help you figure this out and explain it!

Not a pipeline customer?

Read on (but also take a look at the product, it’s really good for this stuff!)

There are 3 types of number discrepancies

This could be either that:

- Your simple metrics are wrong (i.e. sessions)

- Your breakdown of dimensions across metrics are wrong (e.g. your sessions by channel are wrong)

- Your complex metrics are wrong (engagement time)

So let’s dive in.

The first step - pick a single day to compare numbers on

We literally can’t say this one enough.

Pick a single day.

The number of times we see people trying to debug this and they have 2 months selected. It’s going to make it harder to find examples and makes the whole thing more error prone.

Start with one day.

If that’s correct, try a different day.

And if that’s all good now is the time to start trying longer date ranges and figure out if it’s an over time problem.

Bucket 1: Simple metrics are wrong

Ok so suppose your simple metrics are wrong.

i.e. number of sessions or page views, for example.

What could be happening here? Let’s start ruling out some options.

1. Possible Cause: Data Freshness

Potentially an obvious one, but if you are looking at really recent data not all the data will be complete.

- The GA4 BigQuery streaming export exports data continuously throughout the day but not 100% in real time. If you have both streaming and daily tables, the streaming table is removed once the daily table is finalised.

- The daily table produces a single, stable table for each day.

In both cases GA4 takes up to 72 hours to finalise.

Now this difference is usually pretty small for most sites, but it’s an easy one to catch.

How do you know if you have this problem?

Are you comparing time periods that include the most recent 3 days? You might have it.

How do we fix it?

When you’re choosing your date range, compare dates that are more than 3 days old.

2. Possible Cause: GA4 Raw Data Daily Limits

The GA4 BigQuery daily data table has daily limits of 1 million events per day (or 20 billion events per day for 360 users).

This is large but not infinity and it’s very possible to hit it.

How do you know if this a problem?

If you do hit this, Google will start emailing you and threatening to stop the export at which point you will have no data.

You can also check your total number of events in the explore report.

How do we fix it?

What could you do about it if you’re hitting that limit?

- Upgrade to Analytics 360 - but that costs.

- Switch to streaming. Costs far less! But the data export is slightly less rich. (If only there was a tool which could help you!)

- Remove some events which are less important.

Best candidates for removal are often:

- Scrolls

- Custom events which duplicate pageviews e.g. “product view”

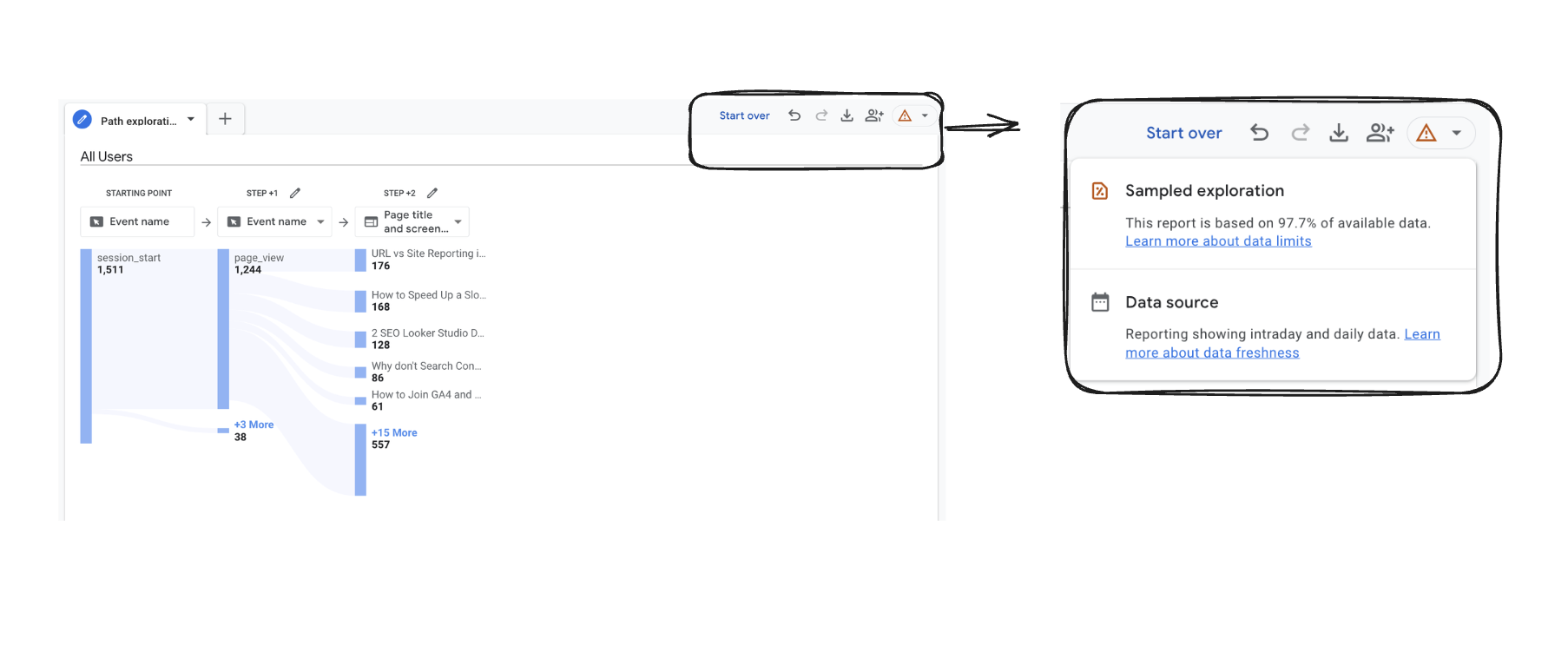

3. Possible Cause: API Sampling Issues

The raw BigQuery data doesn’t have sampling.

But the UI and Looker Studio connector do.

How do you know if this is a problem?

The easiest way to check whether your GA4 data is sampled is to look in the corner and look for a red warning sign (see below). This will tell you if it’s sampled.

When it comes to Looker Studio, there is often sampling.

If you’ve got more than 10 million events in your window, it’ll start sampling and things won’t line up.

Looker Studio just won’t tell you. Google’s documentation states that “Looker Studio doesn't indicate if data from Google Analytics is sampled” which is not the most helpful thing 🤷

How do we fix it?

Two options:

- Use your BigQuery export safe in the knowledge it isn’t sampling.

- Compare a single day, which for most properties will allow you to validate your numbers without sampling.

- Download our API data day by day.

4. The GA4 BigQuery Streaming Export is not 100%

The GA4 streaming export will often be 0.5-1% off for larger sites.

Google describes the streaming export as a “best-effort operation”. In practice this often means you’ll see a loss of at least 1% data.

How do you know this is a problem?

Method 1:

Compare the UI to the raw event count in BigQuery.

Method 2:

Export daily and streaming tables.

Get the final event count in the streaming tables before they finalise then compare with daily.

How do you fix it?

- Reduce your export down to 1 million per day and use daily.

- Pay for 360.

- Accept that with ad-blockers this was always going to be marketing level data anyway (i.e. your CFO will have different revenue numbers). The point is be relatively accurate not absolutely accurate. And then save a lot of money.

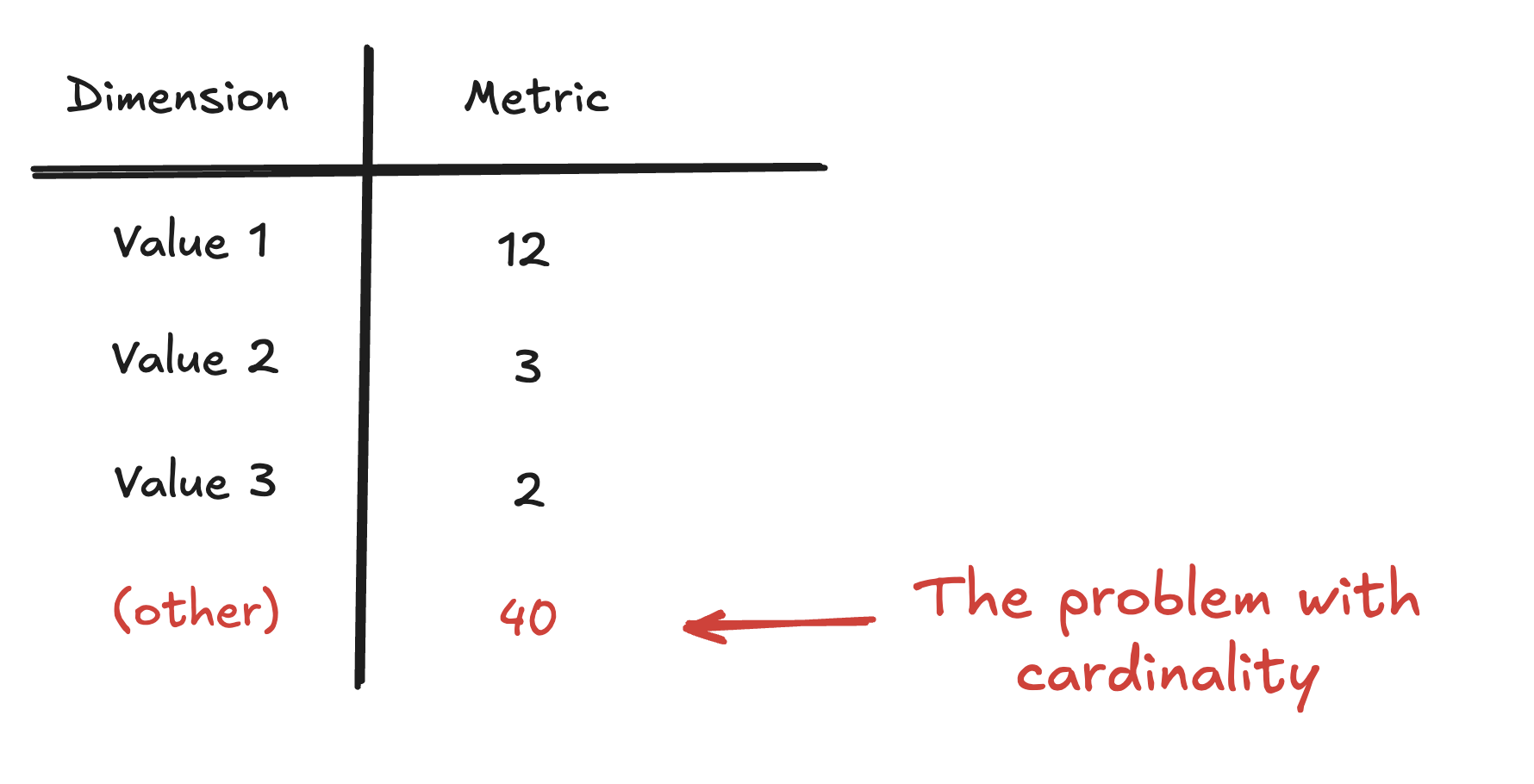

5. The GA4 API uses HyperLogLog++ sampling

To make expensive calculations fast GA4 uses an estimation method called HyperLogLog++ sampling to handle cardinality.

For those of you who have forgotten what that is:

- When you have lots of different values for a column. That’s high cardinality. It’s slow to work with.

The interface will do something like this:

Google needs GA4 to work quickly for lots of websites, so they use a function called HyperLogLog++ to make it run more quickly.

Almost every implementation of GA4 BigQuery (Pipeline included) doesn’t use this and uses exact counts.

How do you know if you have this problem?

You can technically recreate this formula in BigQuery to check if this is the problem:

But this method only tends to account for very small differences in our experience and it normally isn’t this.

How do you fix it?

So technically with GA4 BigQuery you probably have more accurate metrics.

If you need them to really match temporarily I would follow the blog post above, but I would probably say it’s not worth your time. Rule out literally everything else and don’t expect the numbers to be perfectly identical.

6. You got the basic formula’s wrong

Hopefully unlikely, but it’s worth just double checking.

How do you know if you have this problem?

If you’re only checking sessions, check page-views first.

Page views is the single simplest metric to build in BigQuery.

You literally just count events with the name page_view.

If you can’t get that to line up it’s most likely a different reason.

How do you fix it?

We’ve got a whole article on how to correctly measure sessions.

Has your data been passed in via a third party?

If you’ve seen an unexpected extreme drop in traffic, this might be your issue.

This is more of a technical point, but we’ve seen cases where ga_session_id (i.e. the ID for a session) is passed in as a string from a 3rd party, this will cause no sessions to be tracked in the interface.

A specific one, but may be relevant for your scenario.

7. Are you doing incremental builds?

Sessions have some fiddly problems with incremental builds.

Broadly the logic is as follows:

- Sessions can cross midnight.

- This means with incremental builds you can count the same session in two days.

That’s bad.

How do you know if this is a problem?

If you’re doing incremental builds with sessions and you haven’t thought of this you have this problem.

You can also count sessions without using incremental builds to check if your overall numbers are correct.

How do you fix it?

You look at the sessions you worked with the previous day and don’t re-generate them.

Bucket 2: Your breakdown dimensions are off

Ok so your overall sessions and metrics are ok, but the breakdowns of those sessions are wrong.

What’s happening now?

First off is it do with traffic source? 90% of this issue we see is to do with traffic source.

8. Are you using the same traffic source field?

There are a load of different GA4 traffic sources. If you want to deep dive, go to this blog.

Broadly if you’re not comparing apples to apples this won’t work.

How do you know if this is a problem?

If you’re not using:

traffic_sourceand comparing to users- This is the first medium/ source etc which brought the user to the site.

- It compares with Reports > Acquisition > User Acquisition

session_traffic_source_last_clickand comparing to sessions- This is the session medium/source using last non direct click attribution.

- It compares with Reports > Acquisition > Traffic Acquisition Session

How do you fix it?

Pick one of the fields mentioned above.

Now you can make it line up with the event level medium/source and there are benefits to this:

- You can change attribution models.

- If you want to segment sessions to create the old UA session model and get better multi touch attribution you can.

- It works for all of history,

session_traffic_source_last_clickis a relatively recent field that was added in July of 2024.

It's what we use at Pipeline, but it does take more work and leads us onto this problem:

9. Do you have 90 days worth of data?

If you are manually calculating it then we get onto our next set of issues.

By default, the GA4 interface uses Last Non Direct Click Attribution (LNDC).

LNDC ignores direct traffic and attributes all of a traffic source to the last channel/ traffic source that the user clicked on from before visiting the site.

The lookback period for this is 90 days.

If you do not have 90 days worth of data yet then your numbers will be off. You will need 90 days and then calculate the day with the 90 days lookback. I.e. if you have 95 days of data then technically only 5 will be guranteed to be accurate.

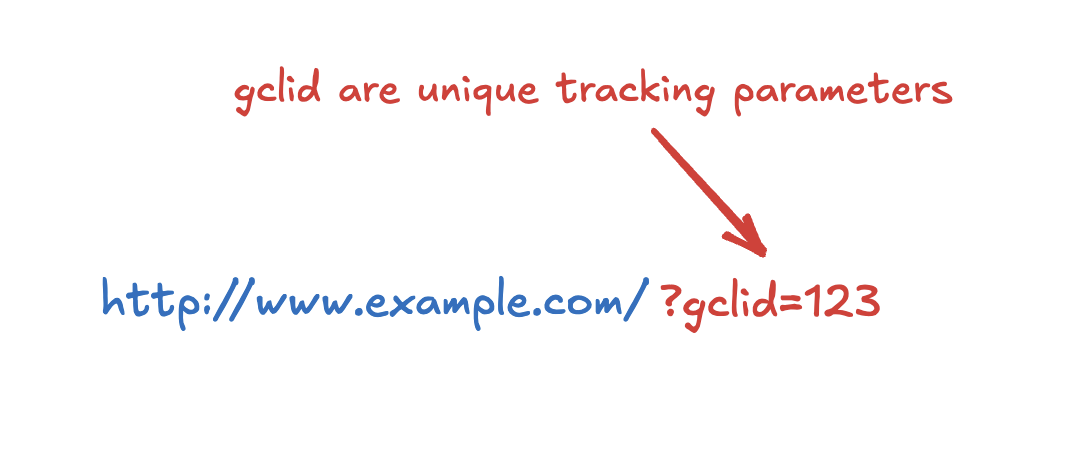

10. GA4 BigQuery has had long standard issues misattributing paid traffic

The tracking for Paid sources of traffic are through URL parameters.

See the example below of gclid which is used to track Google Ads clicks.

There are also Google’s new URL parameters for ad tracking

- wbraid

- gbraid

Sometimes the GA4 BigQuery export doesn't attribute paid search correctly. In other words, there were URLs which contained gclids, but they weren’t being attributed to cpc.

How do you know if you have this is a problem?

If your paid channels don’t add up but your others channel do, this is probably the issue.

How do you fix it?

Look for the existence of the parameter (e.g. gclid, wbraid etc.) and manually set the medium to cpc.

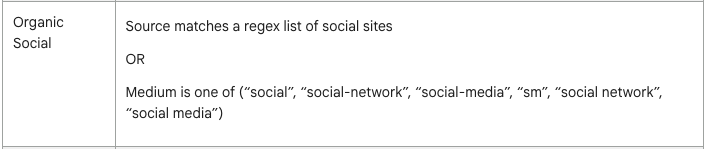

11. Default Channel Groups have some strange logic

We’ll have a full blog post on this, but the short version is:

- Sometimes the default channel groupings won’t bucket things how you expect

This means when you’re trying to re-create this in BigQuery you can get caught out.

Here’s an example of Google’s criteria for organic social:

Seems pretty simple? The "source matches the list of sites" is where things often get messy. Gmail? Often rolled into organic search. Blerg.

How do you know if this is a problem?

The best way to debug this is to compare the UI and BigQuery at medium and source level.

You’ll discover discrepancies and then that will show you how your channel groupings might be bucketing things differently from the UI.

How do you fix it?

Here are some common issues we’ve seen:

- Organic Search doesn’t match

- Sometimes GA4 will misclassify smaller search engine traffic as referral e.g. yahoo (it still exists!)

- Cross Network doesn’t match

- You have to get this data from session_traffic_source_last_click, it relies on data not captured in GA4 and shared from other Google sources.

- Paid channels don’t match

- Mentioned this one above, this is usually due to medium being set incorrectly and GA4 missing the existence of parameters

12. You’ve accidentally used the wrong scope

GA4 UI, LS and BigQuery will all let you break scope when calculating your analytics.

This can break your numbers and cause you problems.

(We have a whole blog about GA4 scoping which would be worth reading, but we’ll TL;DR you here).

How do you know if this is a problem?

Are you following the table below:

If you’re not your breaking scope. The easiest way to explain this is with an example.

Suppose we build a session in explore with:

- users

- country

- sessions

And then we add the dimension:

- session medium

- I.e. where did each session come from

If we do that we’ll over count users, because the same user has multiple sessions.

How can you fix it?

Follow the table above. Only use the correct metrics with dimensions.

Bucket 3: Your complex metrics don't line up

Hmm ok so this one is relatively hard. The problem with the more complex metrics is broadly that:

- They're fiddly to calculate.

- They have weird edge cases.

- If you don't account for both of them it's very easy to get incorrect numbers.

Here are some of a large list of issues:

- Sometimes GA4 will drop events causing events built off other events to be missed.

- Most user metrics have to be calculated in a BI tool because you need to count the user ID.

- If you started using user_id part of the way through you the UI will have changed but BigQuery will just give you all the raw to calculate it yourself.

- App first_open events sometimes don't have sessions.

And so on into the void. If you've got specific questions at this point drop us a message/tweet on social and we'll see if we know!

Also:

If you’ve gotten this far, why not try out Pipeline?

Our GA4 - Pipeline product is designed to give you production grade GA4 BigQuery data without any data engineering needed.

We take care of all the complex SQL as well as providing you more powerful features like the ability to create more complex conversions, session dimensions & metrics, user groups, multi touch attribution (we can split sessions on source just like UA used to), spam filters and so on.

You can then access the tables directly in BigQuery or with our own Looker Studio connector.

If you’re interested, either get a free trial and play around or book an initial meeting.