How to query your streaming & daily GA4 BigQuery tables

Let's talk about how to select date ranges with the GA4 BigQuery export.

We'll cover:

- A single day - 2025-02-01

- A range - All of February 2025

- A dynamic range: The last 30 days.

Let's dive in.

Can't we just query date columns?

Are you already familiar with BigQuery and thinking, "I can just query a date column"?

Unfortunately not. There is a date column, but unlike normal you can't just use it to select date ranges.

Why?

Typically analytics data in BigQuery is partitioned, however the GA4 data is sharded.

If you just query a date column in the GA4 export, you'll probably only get a single days data.

Let's unpack that jargon.

Building GA4 BigQuery yourself?

Need a example GA4 BigQuery query to get started? We've got a session table query with all the default dimensions and metrics you can paste into Looker Studio.

Get up and running in 15 minutes

Our Pipeline product lets you skip all the data engineering and SQL. GA4 BigQuery data with custom dimensions, user properties, multi touch attribution, lower BQ costs and more out of the box.

What is the difference between sharded and partitioned data?

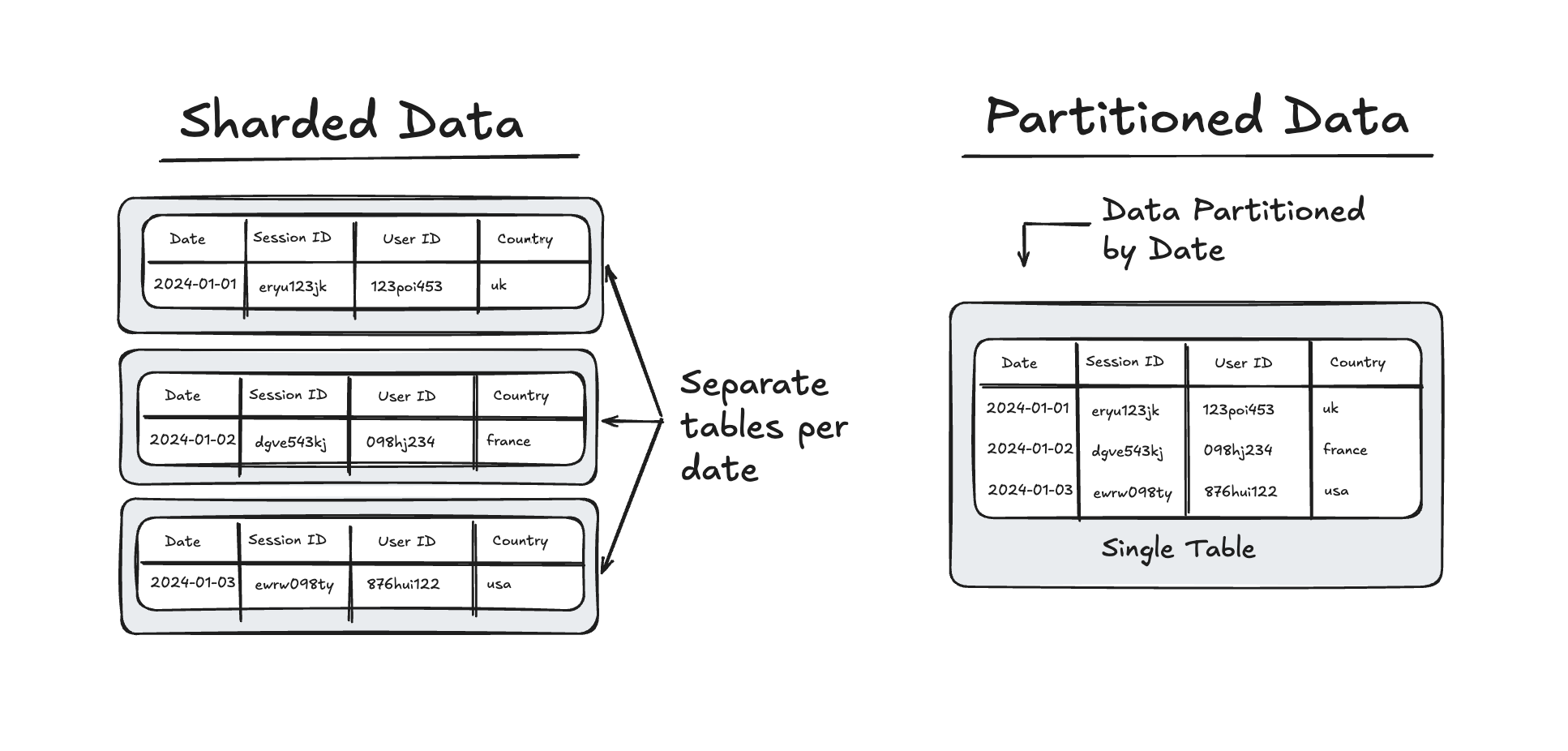

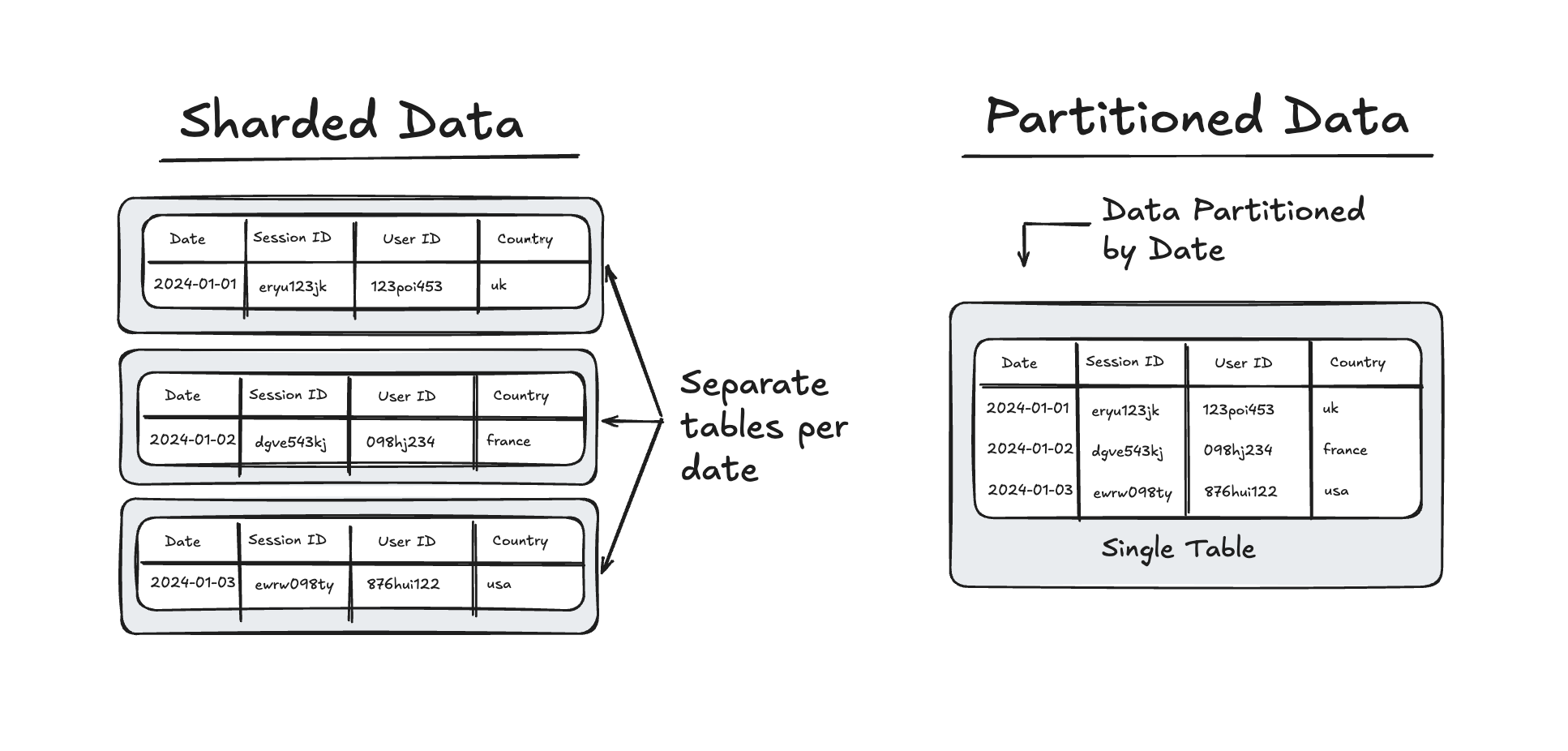

Over time data tends to be stored in one of two ways - partitioned or sharded. (This is because it makes it cheaper to query.)

A partitioned table is a single table where the data is divided into smaller, more manageable segments (partitions), based on a specific column (e.g. a date column).

A sharded table, on the other hand, involves splitting data across multiple tables or databases. This means that each “shard” is an independent table.

GA4 exports are sharded tables. There is one table per day. So to query a date range we need to query multiple tables at once.

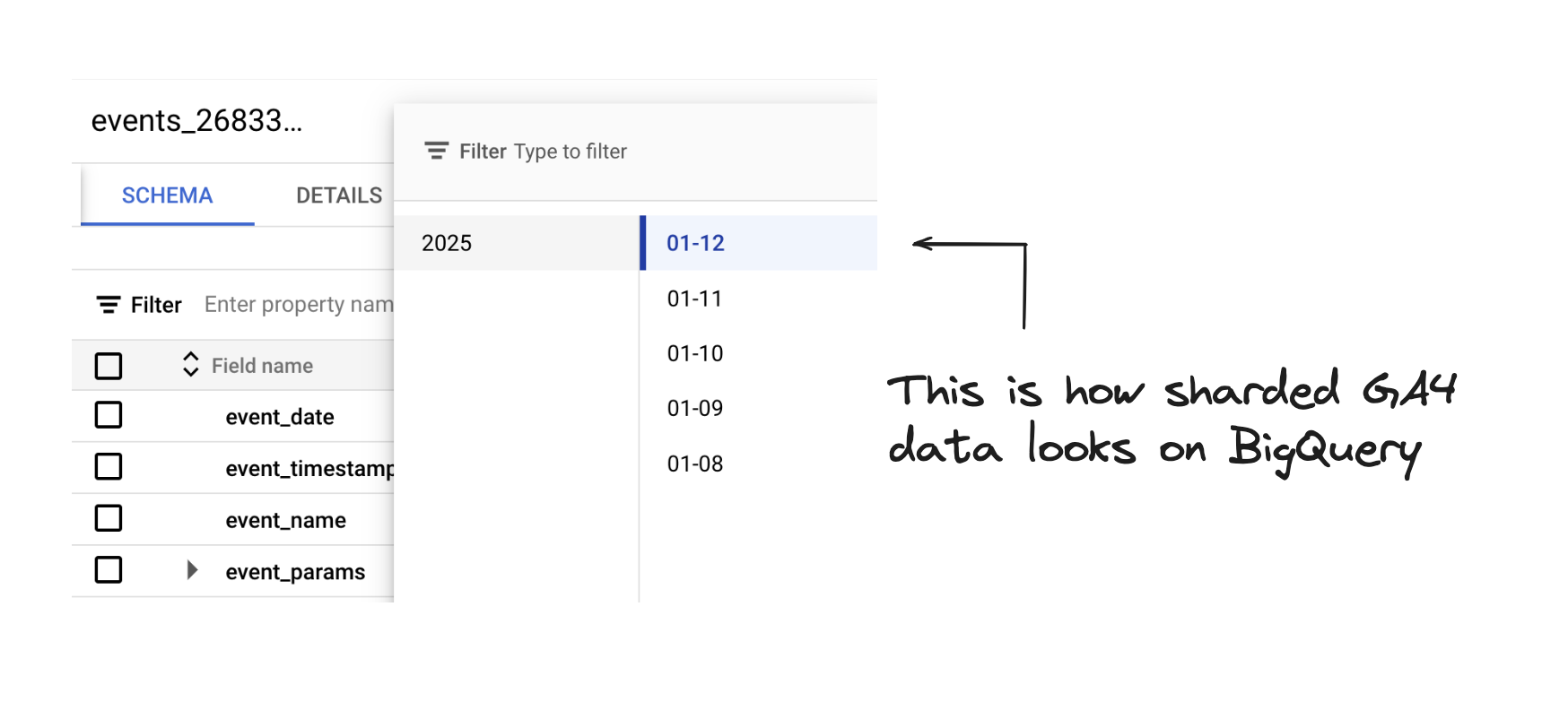

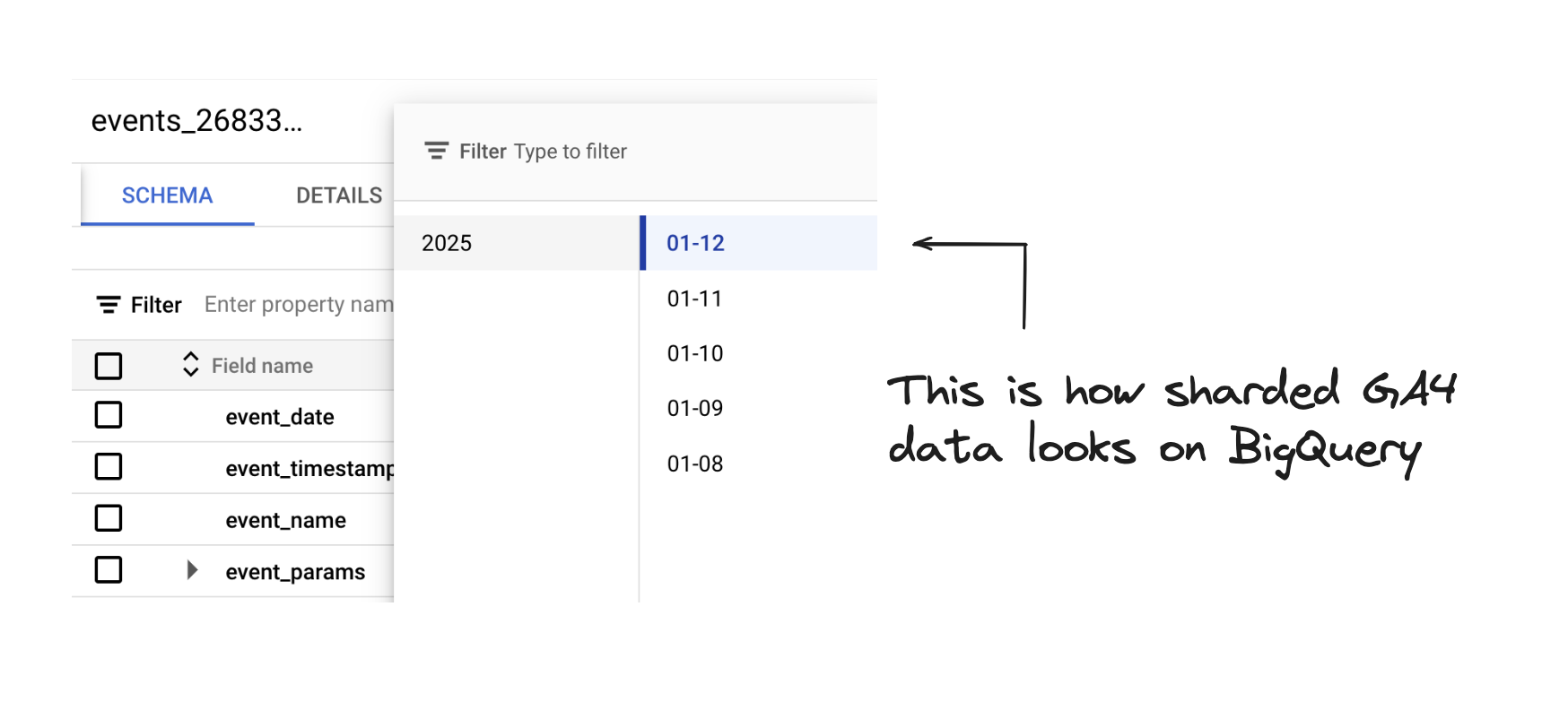

What tables does the GA4 BigQuery export create?

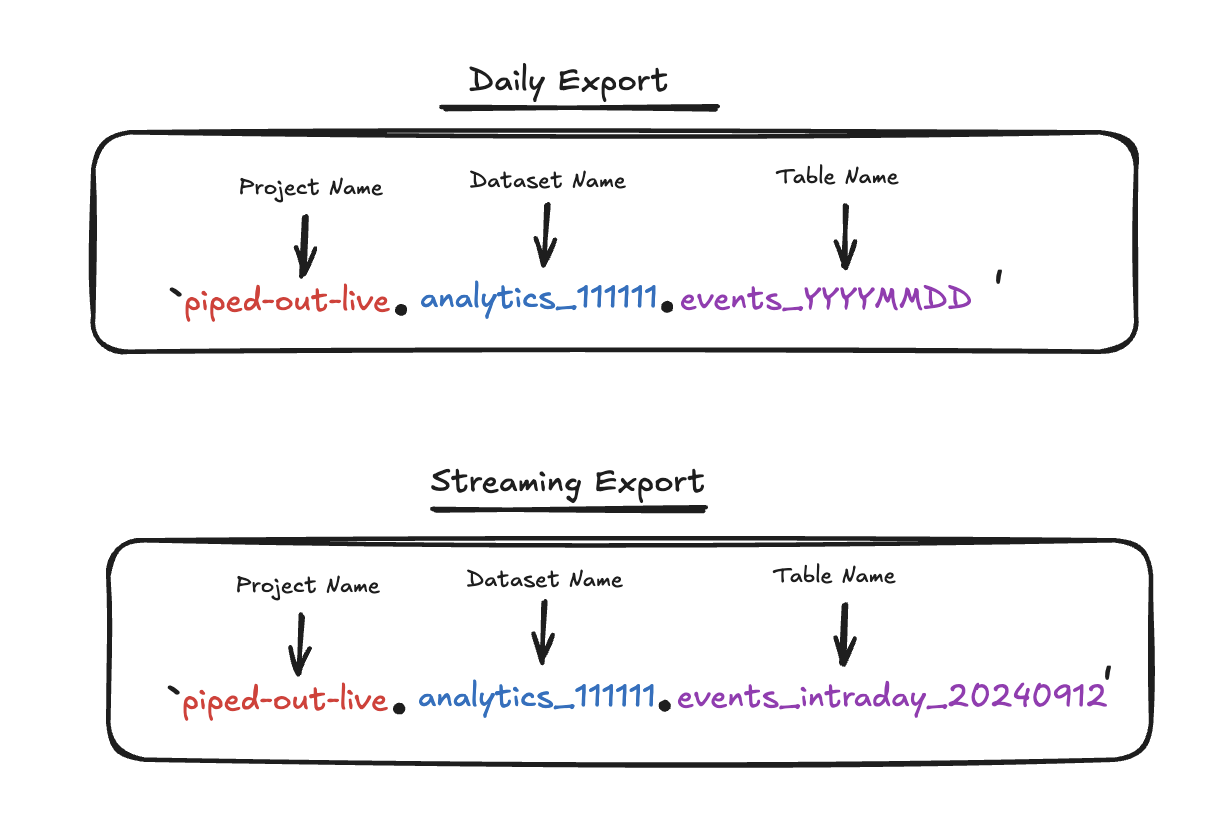

Depending on if you are using the streaming or daily export, it makes one of two different tables.

Streaming:

For each day, the streaming export produces one new table. Data is exported continuously throughout the day.

These are events_intraday_YYYYMMDD tables.

Daily:

The daily option produces a single, stable table for each day which contains a full export of a days events.

These are events_YYYYMMDD tables.

What is the difference between sharded and partitioned data?

A partitioned table is a single table where the data is divided into smaller, more manageable segments (partitions), typically based on a specific column (e.g., a date column).

A sharded table, on the other hand, involves splitting data across multiple tables or databases. This means that each “shard” is an independent database containing a subset of the data.

In the case of the GA4 data for the streaming and daily tables, this is based on the date.

GA4 BigQuery export tables have a consistent structure

There are 3 parts to the location of our exported tables:

- Project Name

- Dataset Name

- Table Name

The Table Name updates based on the date.

Everyday Google creates a new table with a different table name

Here's an example location of a GA4 BigQuery table.

We've got:

alcoholic-anteater- The project nameanalytics_111111111- The dataset nameevents_intraday_20240912- The table name

Each day it adds one more table with a different date. In the example above, we've got two tables for the 12th and 11th September 2024.

How can we select date ranges using SQL for sharded tables?

If you just want to grab the queries we've got a widget for that, scroll down and you can copy and paste to your hearts content.

But if you'd like to understand the logic, let's take a look.

Logic: How to calculate Sessions for an individual day

If you’re only going to query a single day, you can just reference the individual table that corresponds to that day. Easy peasy.

This query calculates the number of sessions for a single day. In this case 12th September 2024.

Let’s talk about: table_suffix

Logic: Calculating Sessions for a longer time period

Let’s say that you want to query a longer time period.

_table_suffix allows us to query multiple tables using a wildcard value.

What we’re doing is basically:

- Using

_table_suffixto select the number part of the table name - Run date logic on this part to just pull the tables related to a specific date period.

This is easier explained with an example.

Calculating Sessions over a fixed time period.

In this example, we calculating sessions over a longer time period between:

- 2024-08-20

- 2024-08-28

The key additions to enable this are:

- The wildcard (*) after the dataset name in the table selection.

- The

WHEREclause which specifies the date range.

This means your query will look different depending on if you are using the streaming or daily export.

- Streaming:

events_intraday_* - Daily:

events_*

So for the streaming export it might look like this:

The key part of this is:

Using the widget to calculate sessions

We’ve built a little widget which allows you to define the data set and date range which works using this logic.

This allows you to set the Project Name and Analytics Property ID which will automatically update the FROM statement in the SQL.

You can also custom set this based on whether you are looking at the Streaming or Daily GA4 tables.

A clever trick to make it work for events_ and events_intraday tables

Because the table names are slightly different, we've added a clever trick to make it work for both.

cast(regexp_extract(_table_suffix, r"(\d{8})$") as int64)

This regex extracts the date from either table name meaning you can use these queries for both streaming and daily exports.

Customise the queries.

Set-up variables to customise our BigQuery queries for your setup.

Calculating Sessions over a fixed time period using the table widget

-- This CTE extracts the Client ID (user_pseudo_id) and unnests event_params to extact

-- the session_id

with

unnest_data as (

select

user_pseudo_id,

(

select value.int_value

from unnest(event_params)

where key = 'ga_session_id'

) as session_id

from

-- This table will need to be updated to your GA4 BigQuery table

`@project.analytics_@analytics_property_id.@event_type*`

where

-- The date range being selected will need to be updated

cast(regexp_extract(_table_suffix, r"(d{8})$") as int64) between '@start_date' and '@end_date'

)

-- Count distinct user-session combinations

select count(distinct concat(user_pseudo_id, session_id)) as sessions

from unnest_data

;

Calculating Sessions over the whole time period.

In this example, we’re calculating sessions for all your GA4 data. This is fine for ad-hoc analysis but we wouldn’t put this into any reports!

What are we doing?

The key adjustment to enable is:

- The wildcard (*) after the dataset name in the table selection.

- Removing the

WHEREclause which limits the date range.

-- This CTE extracts the Client ID (user_pseudo_id) and unnests event_params to extact

-- the session_id

with

unnest_data as (

select

user_pseudo_id,

(

select value.int_value

from unnest(event_params)

where key = 'ga_session_id'

) as session_id

from

-- This table will need to be updated to your GA4 BigQuery table

`@project.analytics_@analytics_property_id.@event_type*`

)

-- Count distinct user-session combinations

select count(distinct concat(user_pseudo_id, session_id)) as sessions

from unnest_data

;Here is where we’re changing our select statement to * select all.

from

-- This table will need to be updated to your GA4 BigQuery table

`@project.analytics_@analytics_property_id.@event_type*`

;Calculating Sessions over a dynamic time period.

In this example, we’re calculating sessions for the 30 most recent days, excluding today).

What are we doing?

The key adjustment to enable is:

- The wildcard (*) after the dataset name in the table selection.

- Adjusting the where statement to use date logic

with

unnest_data as (

select

user_pseudo_id,

(

select value.int_value

from unnest(event_params)

where key = 'ga_session_id'

) as session_id

from

-- This table will need to be updated to your GA4 BigQuery table

`@project.analytics_@analytics_property_id.@event_type*`

where

-- Filter the tables based on the last x days

cast(regexp_extract(_table_suffix, r"(d{8})$") as int64)

between format_date(

'%Y%m%d',

date_trunc(current_date(), day) - interval 30 day

) and format_date(

'%Y%m%d', date_trunc(current_date(), day) - interval 1 day

)

)

-- Count distinct user-session combinations

select count(distinct concat(user_pseudo_id, session_id)) as sessions

from unnest_data

;The key part of this is:

from

-- -- This table will need to be updated to your GA4 BigQuery table

`@project.analytics_@analytics_property_id.@event_type*`

where

-- The 30 figure can be updated to another number of days to look back.

_table_suffix between format_date(

'%Y%m%d', date_trunc(current_date(), day) - interval 30 day

) and format_date('%Y%m%d', date_trunc(current_date(), day) - interval 1 day)

;Hopefully that’ll give you all the date logic you need to get rock and rolling with your GA4 events tables.