Setup: Connecting GA4 to BigQuery

Are you already exporting GA4 to BigQuery?

If you are already exporting your GA4 data to BigQuery, go to Step 3. If not, start at Step 1.

Pipeline will use the permissions that your email has, so those need to be correct!

(If you need a service account, please get in touch.)

This can be broken up into a few stages:

- Setting up Google Cloud

- Setting up the GA4 -> BigQuery connection

- Getting the correct permissions from Google Cloud

Once this is set up, you can move on to setting up Pipeline.

Step 1: Setting up Google Cloud

To allow GA4 data to be pumped into BigQuery, you need to have a Google Cloud account set up.

In the process of setting up this cloud account, you'll set up a project. This is where everything will live!

Google does a pretty good job of walking you through these basic parts.

You can get started on this page.

Once you are set up, you will need to have a self-serve Cloud Billing account.

This allows you to automatically pay for your Google Cloud usage costs.

How much does this cost you?

You might’ve heard terrible things about cloud bills, but don’t worry, BigQuery won’t cause you any issues.

For context, a site the size of Piped Out, which gets a couple thousand visitors a month, costs us approximately $0.03 a month to run. And the first $6.25 of query usage every month is free!

Are you running a large property?

If you’re notably larger, then please take a look at the BigQuery costs page. The most important figure is the cost of querying data, which is currently $6.25 per TiB scanned.
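As a rough illustration of that arithmetic, the sketch below estimates a monthly query bill from the two figures quoted above ($6.25 per TiB, with the first $6.25 each month free). The function name and the example volumes are hypothetical, and storage costs are not included.

```python
PRICE_PER_TIB_USD = 6.25    # query price quoted above
FREE_USD_PER_MONTH = 6.25   # monthly free allowance quoted above


def estimate_monthly_query_cost(tib_scanned: float) -> float:
    """Return the estimated monthly query bill in USD (storage not included)."""
    if tib_scanned < 0:
        raise ValueError("tib_scanned must be non-negative")
    gross = tib_scanned * PRICE_PER_TIB_USD
    # The free allowance is subtracted once per month; the bill never goes below zero.
    return round(max(0.0, gross - FREE_USD_PER_MONTH), 2)


# Hypothetical monthly query volumes, in TiB.
for tib in (0.5, 1, 5):
    print(f"{tib} TiB scanned -> ${estimate_monthly_query_cost(tib):.2f}")
```

Under these assumptions, anything up to 1 TiB of queries a month comes out at zero, and each TiB beyond that adds $6.25.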

It’s also worth noting we’ve helped a lot of customers massively reduce their data costs (sometimes 100x, depends a lot on your current maturity) with Pipeline.

If you are finding yourself spending a lot of money on BigQuery please reach out. There is a good chance we can help!

Back to setting up your billing account

Billing accounts can be set up and updated on this page in Google Cloud.

Important: Without billing details on your Google Cloud account, you’ll use the BigQuery Sandbox, a free, limited environment where data automatically expires after 60 days. Expiring data is bad, as hopefully goes without saying.

Step 2: Setting up the Google Analytics 4 to BigQuery connection

For 80% of people we can walk you through how to set this up relatively simply.

If you do run into issues, Google has a page covering all the possible scenarios.

Let’s get started.

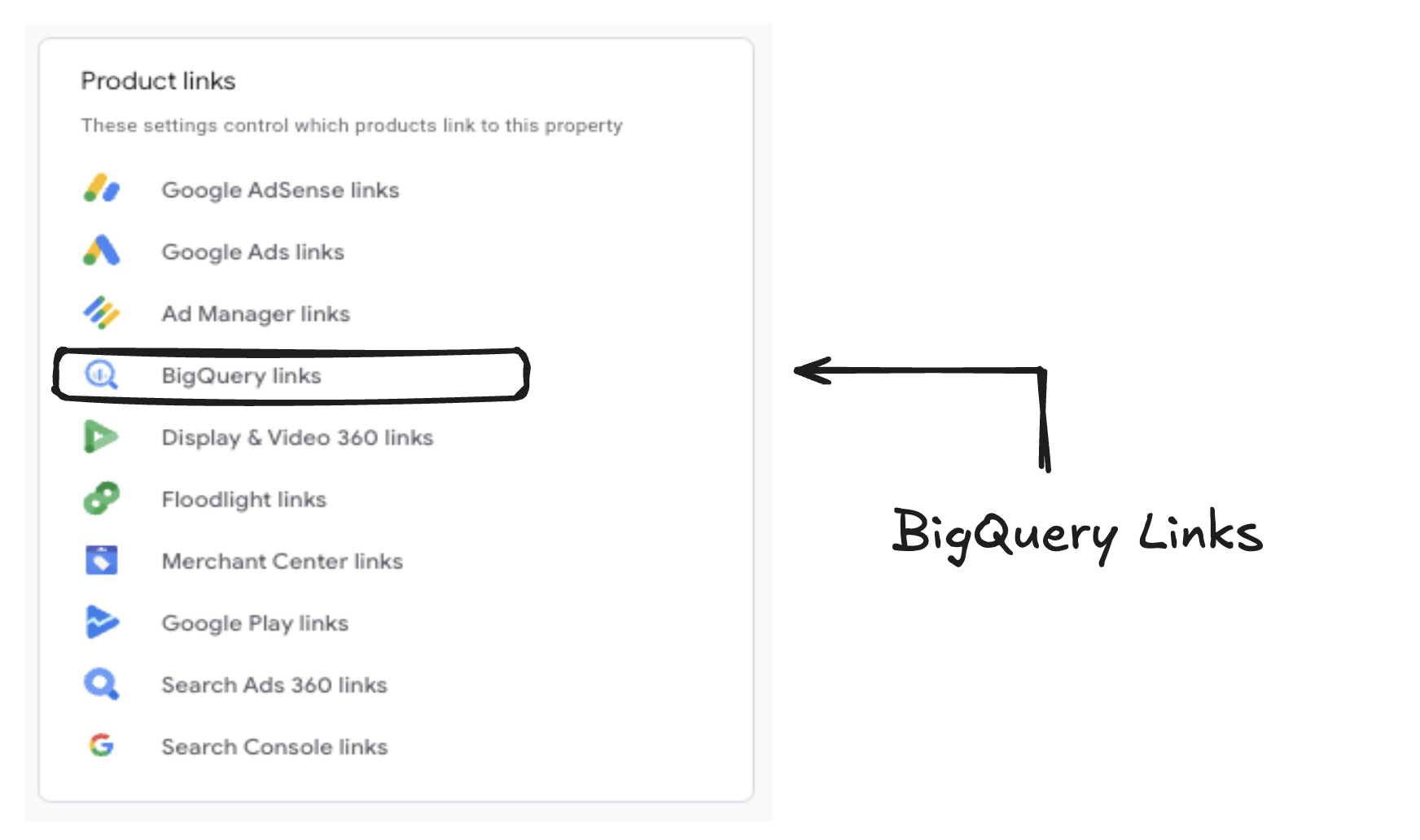

Go to Admin in GA4.

You need to have the Editor role or above at the GA4 property level to set up this connection.

You will then need to click BigQuery links.

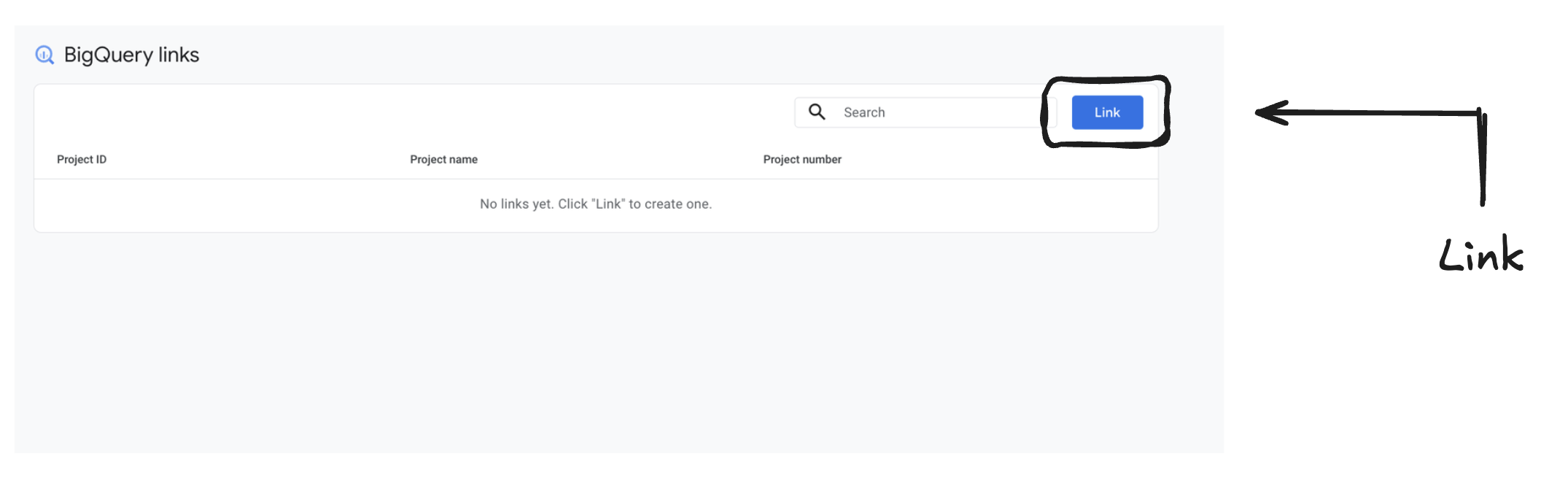

Then select Link.

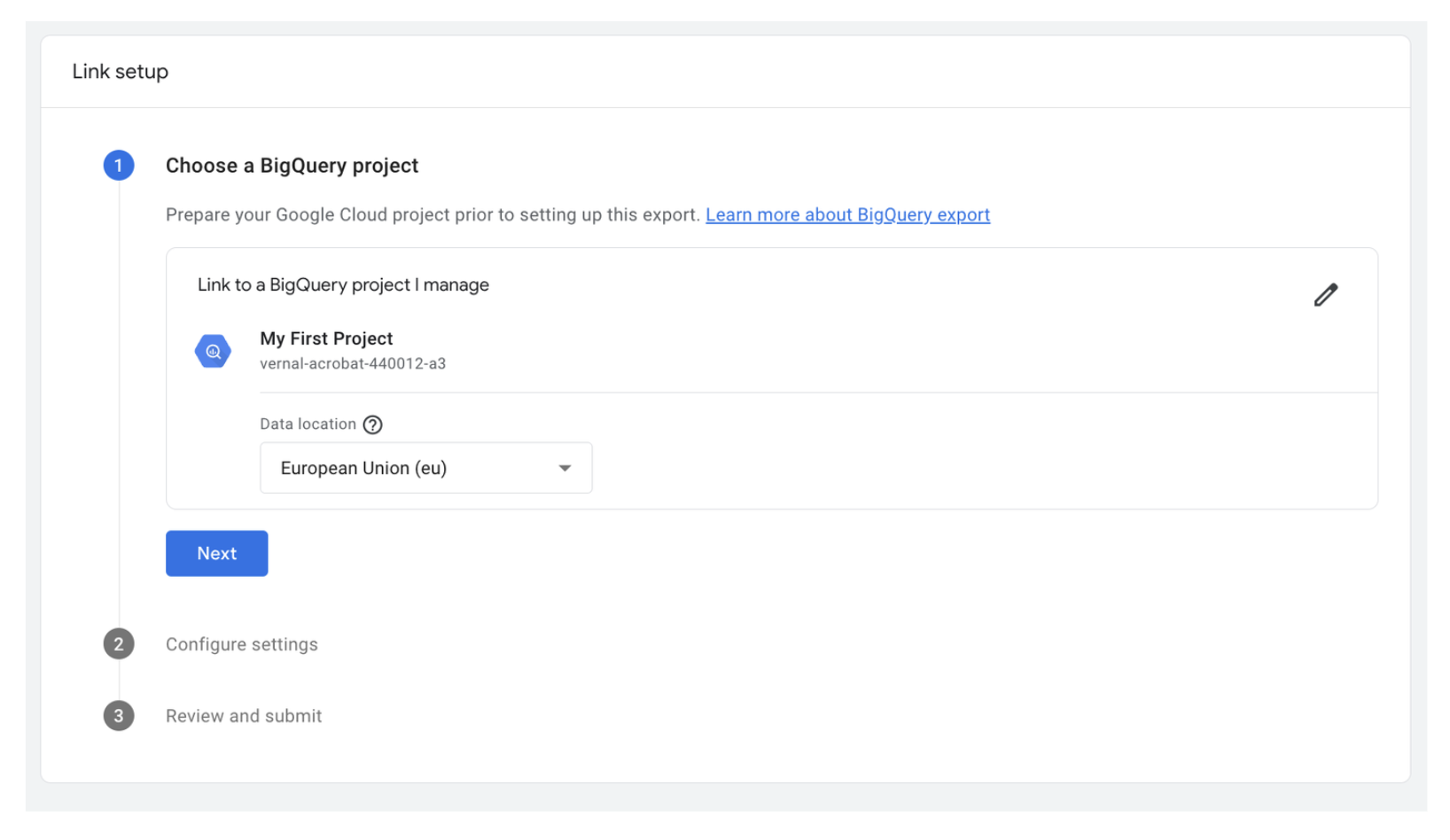

You will then need to select a BigQuery Project you manage and pick a location.

Which project do I pick?

You can either select an existing project or create one yourself. See the previous section on setting up Google Cloud.

What do I put for Location?

If you already have a BigQuery account and existing datasets, then put it in the same region as them.

If you don’t, then just pick the region that your business is based in.

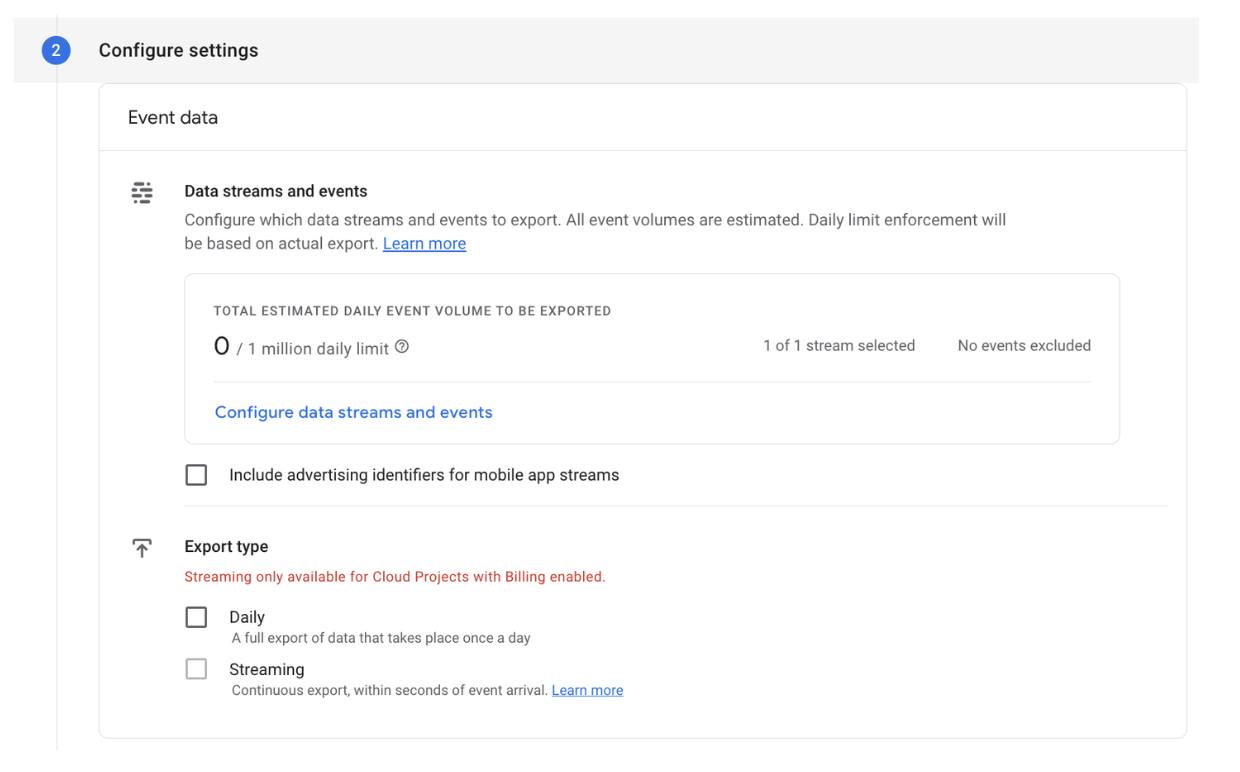

What should I do for data streams and events?

You can configure the data streams and events to exclude specific events if you want.

We recommend just picking the main stream and not excluding anything. There are some edge cases where you might want to do something different. We cover that in this blog post.

What export type should I pick?

The main decision is between the Daily Export and the Streaming Export.

What’s the difference?

- Daily Export - This exports the GA4 data once a day. It has a limit of 1 million events daily.

- Streaming Export - This exports the GA4 data continuously. There is no limit on this.

GA4 will basically tell you how close you are to the daily limit.

If you’re getting to 800k-900k daily events and still growing:

- Turn on streaming

- And turn on daily.

If you’re lower than that:

- Just turn on daily.
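The rule of thumb above can be sketched as a small decision function. This is only an illustration: the function name is hypothetical, the 800k threshold is the lower end of the range mentioned above, and treating a property that is already over the 1 million limit as "turn on both" is our assumption rather than something stated in this guide.

```python
DAILY_EXPORT_LIMIT = 1_000_000  # daily export limit stated above
WARNING_THRESHOLD = 800_000     # lower end of the 800k-900k range above


def recommend_exports(daily_events: int, still_growing: bool) -> list[str]:
    """Return the export types to enable for a GA4 property."""
    near_limit = daily_events >= WARNING_THRESHOLD and still_growing
    # Assumption: a property already over the daily limit also needs streaming.
    over_limit = daily_events >= DAILY_EXPORT_LIMIT
    if near_limit or over_limit:
        return ["streaming", "daily"]
    return ["daily"]


print(recommend_exports(50_000, still_growing=True))
print(recommend_exports(850_000, still_growing=True))
```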

There is currently a nasty bug with the streaming export where sometimes it will not export all of your events.

There is no brilliant solution for this at the moment. Please check out our blog post for more information.

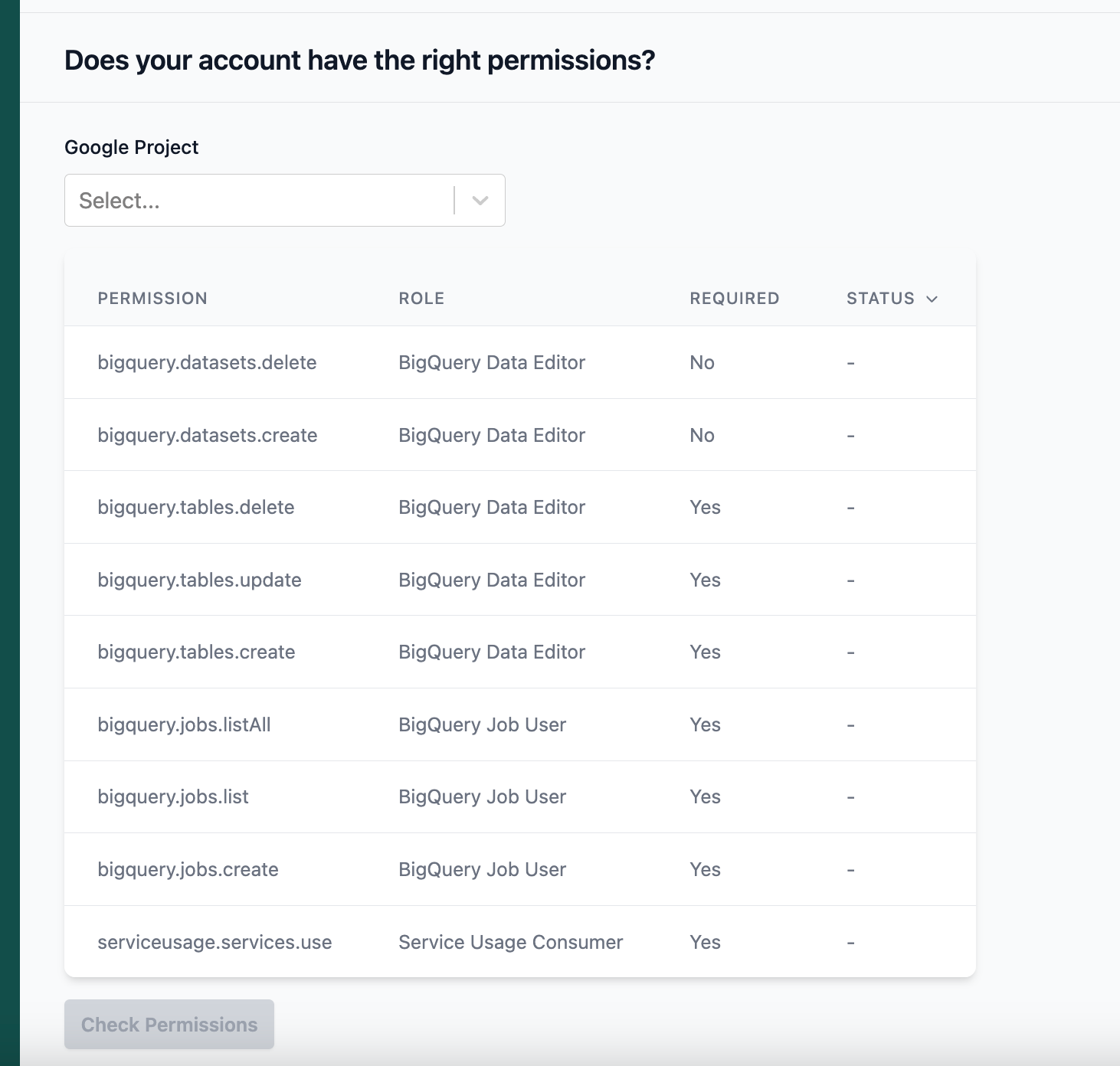

Step 3: Sharing permissions in Google Cloud

For Pipeline to run, it needs the right level of permissions.

You can check that on our permissions page.

How to check this

- Open the link above.

- Share permissions with Pipeline.

- Select the project you're going to use in the drop-down and hit [Check Permissions].

- The table will then tell you if you have the correct permissions.

If you don't, you're going to need to go and get them. The easiest way to do this is to add roles to the user using Pipeline.

The roles are shown in the table, but we'll also go through them here. We're going to talk through two different permission set-ups. The tradeoff is ease of set-up vs how locked down your permissions are.

Simple: The easiest permissions set-up

Give the email you use with Pipeline the following roles at project level:

- BigQuery Job User

- Service Usage Consumer

  - Why do we need this? In order to list the datasets available, the API will try to access the project first to list the resources, and that requires this permission. (It baffled us too at first!)

- BigQuery Data Owner
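If you prefer the command line to the Cloud Console, the three project-level grants can be expressed as gcloud commands. The sketch below only builds and prints those commands, it does not run them. The role IDs are the standard identifiers for the roles listed above; the project ID and email are placeholders, and the function name is hypothetical.

```python
import shlex

# Role IDs for the three roles listed above.
SIMPLE_SETUP_ROLES = [
    "roles/bigquery.jobUser",
    "roles/serviceusage.serviceUsageConsumer",
    "roles/bigquery.dataOwner",
]


def grant_commands(project_id: str, email: str) -> list[str]:
    """Build one gcloud command per role; nothing is executed here."""
    return [
        "gcloud projects add-iam-policy-binding "
        f"{shlex.quote(project_id)} "
        f"--member={shlex.quote('user:' + email)} "
        f"--role={shlex.quote(role)}"
        for role in SIMPLE_SETUP_ROLES
    ]


# Placeholder values: swap in your own project ID and the email you use with Pipeline.
for command in grant_commands("my-project", "me@example.com"):
    print(command)
```

Whoever runs the printed commands needs permission to change IAM policy on the project.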

Complex: Lock down your datasets

We can choose here to lock down our datasets. In this case rather than the user having access to all of your datasets we're going to only provide permissions to specific datasets.

In this case you'll have to manually do some of the BigQuery set-up that Pipeline does for you. Specifically you'll need to create datasets.

You'll need to create:

- A dataset where we'll put the Pipeline.

- A dataset where we'll store the Pipeline raw data.

They need to have names in the following format:

- {dataset_name}

- {dataset_name}_pl_raw

And be in the same region.
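To make the naming rule concrete, here is a minimal sketch that derives the pair of dataset names from a base name of your choice. The function name and the example base name are hypothetical, and the validity check is deliberately conservative (ASCII letters, digits and underscores, up to 1024 characters).

```python
import re

RAW_SUFFIX = "_pl_raw"  # suffix for the raw-data dataset, from the format above
# Conservative check: ASCII letters, digits and underscores, at most 1024 characters.
VALID_DATASET_ID = re.compile(r"[A-Za-z0-9_]{1,1024}")


def pipeline_dataset_names(dataset_name: str) -> tuple[str, str]:
    """Return (main dataset, raw dataset) names for a given base name."""
    raw_name = dataset_name + RAW_SUFFIX
    for name in (dataset_name, raw_name):
        if not VALID_DATASET_ID.fullmatch(name):
            raise ValueError(f"not a valid dataset ID: {name!r}")
    return dataset_name, raw_name


# "ga4_pipeline" is a placeholder base name.
print(pipeline_dataset_names("ga4_pipeline"))
```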

Then for each of those datasets you'll need to give your user the role:

- BigQuery Data Editor

Or they won't show in the interface.
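One way to grant a dataset-level role is BigQuery's SQL GRANT statement, run from the BigQuery console. The sketch below only builds the two statements as text, one per dataset. The project ID, dataset base name and email are placeholders, the function name is hypothetical, and sharing the datasets through the Cloud Console UI works just as well.

```python
DATASET_ROLE = "roles/bigquery.dataEditor"  # role ID for BigQuery Data Editor


def grant_statements(project_id: str, dataset_name: str, email: str) -> list[str]:
    """Build one GRANT statement per Pipeline dataset; nothing is executed here."""
    datasets = [dataset_name, dataset_name + "_pl_raw"]
    return [
        f'GRANT `{DATASET_ROLE}` ON SCHEMA `{project_id}`.`{dataset}` '
        f'TO "user:{email}";'
        for dataset in datasets
    ]


# Placeholder values: use your own project, dataset base name and email.
for statement in grant_statements("my-project", "ga4_pipeline", "me@example.com"):
    print(statement)
```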